TABLE OF CONTENTS

Experience the Future of Speech Recognition Today

Try Vatis now, no credit card required.

The ability to converse with machines, once a staple of science fiction, is now woven into the fabric of our daily lives. This modern marvel is powered by Automatic Speech Recognition (ASR), a technology that transforms spoken words into written text. But how does this seemingly magical process actually work? Let's delve into the intricacies of the ASR pipeline and explore the key technologies driving its evolution.

How Automatic Speech Recognition (ASR) Works

Today, there are two primary approaches to Automatic Speech Recognition:

1. Traditional Hybrid Approach Overview

For over fifteen years, the traditional hybrid approach to Automatic Speech Recognition (ASR) has been the industry standard, combining multiple distinct components. While backed by extensive research, this approach faces challenges in accuracy, adaptability, and complexity.

How It Works:

Acoustic Feature Extraction: Teaching Machines to "Hear"

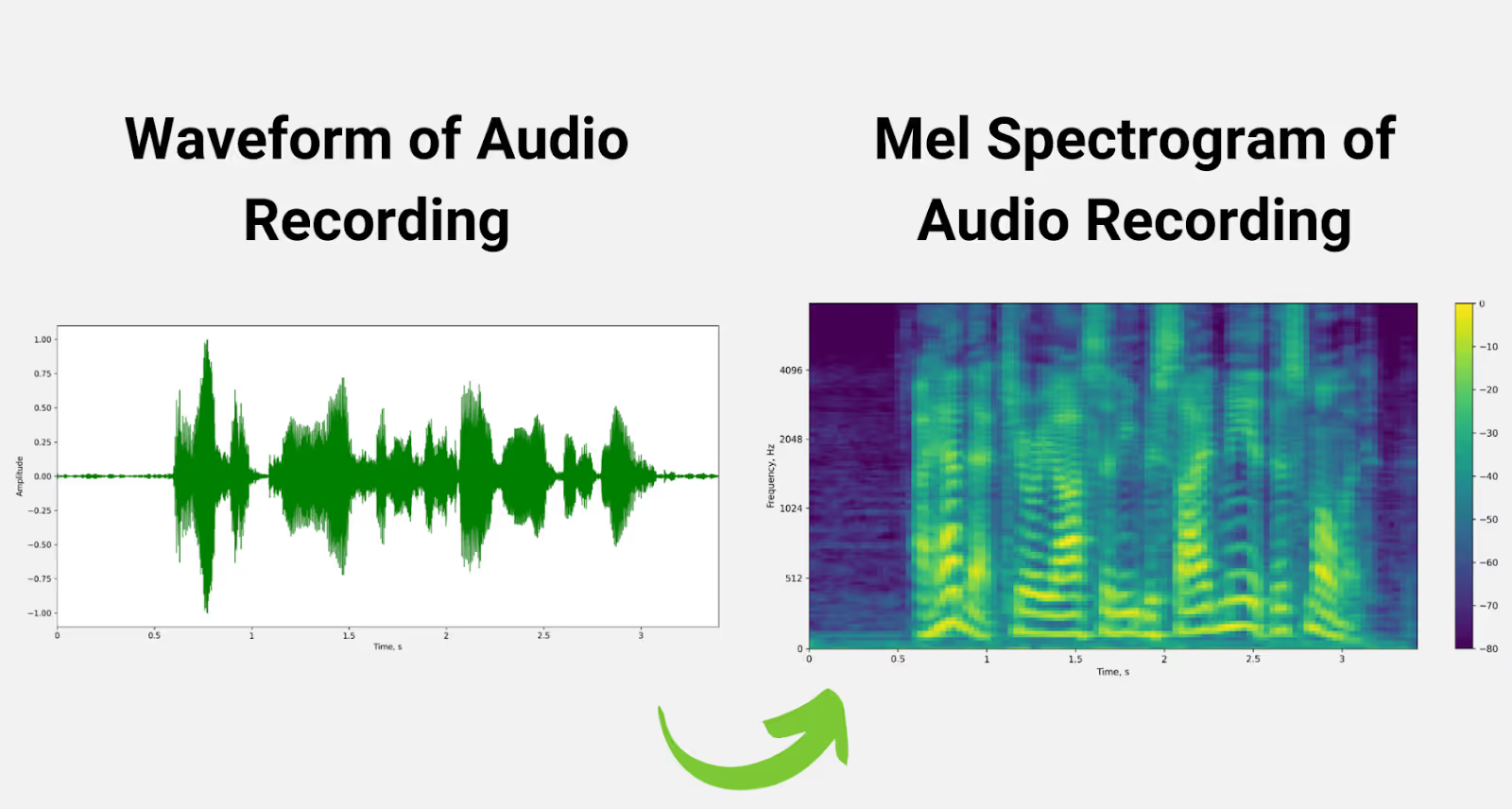

The process begins by converting raw audio signals into numerical representations that machine learning models can interpret. Techniques like Mel-Frequency Cepstral Coefficients (MFCCs) and Mel Spectrograms analyze speech signals, focusing on frequencies most relevant to human hearing. These features act as a "fingerprint," capturing unique patterns that distinguish one sound from another.

Example: A raw audio waveform of someone saying, "Hello," is transformed into a spectrogram, highlighting its energy patterns and frequencies for further processing.

An audio recording raw audio waveform (left) and mel spectrogram (right)

Source: NVIDIA, Essential Guide to Automatic Speech Recognition Technology

Acoustic Modeling (HMM/GMM): Identifying Phonemes

Acoustic models convert numerical features into phonemes—the basic sound units that form words. This is achieved using Hidden Markov Models (HMMs) for sequential alignment and Gaussian Mixture Models (GMMs) to account for variations like accents and speaking styles.

Example: For "Hello," the model identifies phonemes as:

/h/ for the initial sound

/ɛ/ for the vowel

/l/ for the "l" sound

/oʊ/ for the final vowel sound

Forced Alignment: In training, predefined word boundaries help align phonemes accurately to their corresponding audio segments, ensuring precision.

Language Modeling: Predicting Word Sequences

Phonemes alone don’t convey meaning, so a Language Model predicts the most likely sequence of words based on context. Statistical models like N-grams or advanced neural networks evaluate probabilities of word combinations.

Example of Neural Networks:

Input: "I would like a cup of..."

Prediction: Likely completions include "coffee," "tea," or "water" based on context.

Pronunciation Modeling (Lexicon): Mapping Words to Sounds

A pronunciation dictionary bridges the gap between written text and its phonetic representation, ensuring the ASR system understands how words sound.

Example: For the word "cat," the lexicon provides the phonetic transcription /k/ /æ/ /t/.

Decoding: Combining Models for Transcription

The decoder integrates information from the acoustic model, language model, and lexicon to produce the most accurate transcription.

Example:

Input: "The quick brown fox jumps over the lazy dog."

Process:

Acoustic Model: Identifies phonemes.

Language Model: Predicts the most likely sequence of words.

Lexicon: Maps phonemes to their corresponding words.

Output: "The quick brown fox jumps over the lazy dog."

Downsides of the Traditional Hybrid Approach

- Lower Accuracy: Struggles with noisy or overlapping speech environments.

- Complexity: Requires the integration and fine-tuning of multiple independent components.

- Data Dependency: Relies heavily on forced-aligned data, which is costly and time-consuming to obtain.

- Limited Adaptability: Retraining is often necessary for new languages, accents, or domains, reducing flexibility.

In summary, the hybrid approach revolutionized modern ASR systems but is constrained by its reliance on complex, multi-component pipelines, which hinder both accuracy and adaptability. While effective in controlled environments, it struggles in real-world scenarios, highlighting the need for more streamlined solutions such as end-to-end deep learning models.

2. End-to-End Deep Learning Approach

The modern approach to Automatic Speech Recognition (ASR) replaces the traditional multi-component architecture with a streamlined neural network. This single model directly maps acoustic features to text, eliminating the need for separate acoustic, pronunciation, and language models. The result is a simpler, more accurate, and adaptable system.

How It Works:

Acoustic Feature Extraction

As in the hybrid approach, raw audio signals are processed to extract relevant features, such as Mel-Frequency Cepstral Coefficients (MFCCs) or spectrograms. These features serve as the input to the deep learning model.

Deep Learning Model

A single neural network learns the mapping from acoustic features to text. Common architectures include:

- Connectionist Temporal Classification (CTC): Aligns speech and text sequences without requiring pre-aligned data, making it effective for variable-length input and output.

- Listen, Attend, and Spell (LAS): Utilizes attention mechanisms to focus on relevant parts of the audio, improving transcription accuracy.

- Recurrent Neural Network Transducer (RNN-T): Designed for real-time applications, it provides continuous, frame-by-frame recognition.

(Optional) Language Model Integration

While not required for basic functionality, an optional language model can enhance fluency and accuracy, especially for domain-specific or complex tasks. Modern implementations may use transformers for this purpose.

Advantages of End-to-End ASR

- Higher Accuracy: End-to-end models outperform traditional systems, especially in noisy, conversational, or accented speech scenarios. Their ability to learn directly from raw data reduces errors associated with intermediate processing steps.

- Simplified Training and Development: Training a single model simplifies the pipeline, reducing the complexity and time needed for development. This approach eliminates the need for separate tuning of multiple components.

- Adaptability: End-to-end models are more adaptable to new languages, dialects, or domains. With sufficient training data, they can quickly generalize to new tasks and environments.

- Continuous Improvement: Advances in deep learning research, including innovations in architectures (e.g., Transformers) and optimization techniques, lead to ongoing improvements in accuracy, efficiency, and robustness.

Key Technologies Propelling Modern ASR

1. Deep Learning

Deep learning has become the cornerstone of modern ASR, powering advancements in both traditional hybrid and end-to-end systems. Various deep learning models, including Transformers, LSTMs, and CNNs, contribute to improved accuracy, noise robustness, and adaptability.

2. End-to-End ASR Systems

These systems streamline the ASR pipeline by directly mapping acoustic features to text, often eliminating the need for separate acoustic and language models. Architectures like Connectionist Temporal Classification (CTC), attention mechanisms, RNNs, and Transformers have enabled significant performance gains in diverse conditions.

3. Transformers in ASR

Transformers excel in sequence-to-sequence tasks due to their ability to capture long-range dependencies. This allows for more context-aware transcriptions, as demonstrated by their ability to differentiate between sentences like "I saw the bark of the dog" and "I saw the bark of the tree."

4. Natural Language Processing (NLP)

NLP techniques are essential for enhancing ASR systems, particularly in applications that require natural language understanding and generation.

- NLU helps ASR systems interpret the meaning behind transcriptions, enabling more natural interactions in applications like voice assistants.

- NLG facilitates coherent and contextually appropriate responses, making ASR systems more conversational.

- Further NLP advancements, including named entity recognition, sentiment analysis, and dialogue management, are driving continuous improvement in ASR applications.

Example Comparison: Traditional vs. End-to-End ASR

1. Traditional Approach:

Input: "Can you recommend a nearby restaurant?"

Components:

Acoustic Model: Converts audio signals to phonemes.

Pronunciation Model: Maps phonemes to words.

Language Model: Predicts the most likely word sequence.

Output: Errors can occur due to misalignment or lack of context, leading to outputs like "Can you recommend a nearly restaurant?"

2. End-to-End Approach:

Input: "Can you recommend a nearby restaurant?"

Process: A single neural network maps the audio directly to text.

Output: Accurate transcription, such as "Can you recommend a nearby restaurant?"

Advantages: Higher accuracy, better noise handling, and more natural language understanding.

ASR in Action: Real-World Applications

ASR is no longer a futuristic concept; it's transforming industries and enhancing our daily lives in countless ways.

1. Contact Centers and Customer Service

- Real-time Call Transcription: ASR enables real-time transcription of customer interactions, providing valuable insights for agents and supervisors.

- Automated Customer Service: ASR powers interactive voice response (IVR) systems, allowing customers to navigate menus and access information using voice commands.

- Speech to Text Analytics: By analyzing customer conversations, ASR can identify trends, sentiment, and areas for improvement in customer service.

Discover how Automatic Speech Recognition and Call Analytics empower Contact Center as a Service (CCaaS) providers by downloading our in-depth Contact Center White Paper.

2. Media Monitoring

ASR is revolutionizing how organizations monitor media content, delivering unparalleled efficiency and accuracy in processing large volumes of information through speech-to-text APIs.

- Real-Time Content Analysis: ASR is transforming broadcast monitoring by converting audio from news broadcasts, podcasts, and videos into text, enabling rapid identification of key topics and trends.

- Sentiment and Brand Monitoring: By analyzing media content, ASR helps track public sentiment and measure the impact of marketing campaigns or public statements.

- Accessibility and Indexing: Facilitates closed captioning and makes vast amounts of media content searchable, improving accessibility for audiences and researchers.

🎥 Explore how organizations are enhancing media monitoring with speech-to-text technology to transform content analysis.

3. Virtual Assistants

- Natural Language Conversations: Virtual assistants like Alexa, Siri, and Google Assistant rely on ASR to understand our commands and respond in a natural way.

- Hands-Free Control: ASR enables hands-free interaction with devices, improving accessibility and convenience.

- Personalized Experiences: By analyzing our speech patterns and preferences, ASR can help personalize our interactions with virtual assistants.

4. Healthcare

- Medical Transcription: ASR automates the transcription of patient notes and reports, freeing up valuable time for medical professionals.

- Clinical Documentation Improvement: ASR can help ensure the accuracy and completeness of medical records.

- Privacy-Preserving Machine Learning: Researchers are developing techniques to ensure patient privacy while leveraging ASR for healthcare applications.

Learn how Emerald Healthcare transformed their clinical documentation process using speech-to-text technology.

5. Finance

- Compliance and Risk Management: ASR can help financial institutions monitor conversations for compliance with regulations.

- Fraud Detection: By analyzing speech patterns and language, ASR can help identify potential fraud.

- Trader Voice Analysis: ASR can be used to analyze trader conversations, providing insights into market sentiment and trading strategies.

ASR Challenges to Overcome

While ASR has made remarkable progress, there are still challenges to overcome.

1. Privacy and Security:

Data Protection: As ASR systems process sensitive information, ensuring data privacy and security is paramount.

Responsible AI: Ethical considerations around data usage, bias, and transparency need to be addressed.

2. Adversarial Attacks:

Robustness and Security: Researchers are actively developing defenses against adversarial attacks that aim to manipulate ASR systems.

3. Accuracy in Low-Resource Settings:

Accents and Dialects: ASR systems often struggle with accents and dialects not well-represented in training data.

Domain-Specific Vocabulary: Specialized language models are needed for accurate recognition in specific domains like healthcare or law.

4. Human Oversight:

Accountability and Accuracy: In critical domains, human oversight is still necessary to ensure the accuracy and reliability of ASR output.

The Future of ASR: Trends and Predictions

The field of Automatic Speech Recognition (ASR) is rapidly evolving, with researchers and developers working on innovative approaches to enhance accuracy, adaptability, and inclusivity. Here’s what the future holds:

1. Advanced Neural Architectures

Cutting-edge neural network architectures, such as Transformer models and hybrid approaches, will continue to redefine ASR performance. These architectures are designed to better capture the complexities and nuances of speech, including subtle intonations and variations in speaking styles.

Example: Models like Whisper and RNN-T are being enhanced to handle diverse acoustic environments and linguistic patterns.

2. Contextual Feature Extraction

Future ASR systems will incorporate techniques that analyze surrounding acoustic and linguistic contexts to improve feature extraction and recognition accuracy. This allows for better understanding of ambiguous speech or overlapping audio signals.

Example: In a noisy environment, contextual extraction might differentiate "I'm going to the bank" (financial institution) from "I'm going to the bank" (riverbank) based on prior and subsequent words.

3. Low-Latency ASR

Low-latency ASR will become a standard for applications requiring real-time interaction, such as live captioning, video conferencing, and simultaneous translation. Advances in algorithms and processing techniques will reduce the lag between spoken input and text output.

Example: Future systems might achieve sub-100ms latency, enabling seamless communication during live events or collaborative meetings.

4. Multilingual and Cross-Lingual Support

ASR systems will increasingly support a broader range of languages and dialects, breaking down communication barriers worldwide. Enhanced cross-lingual capabilities will allow users to switch languages seamlessly within the same conversation.

Example: An ASR system could recognize and transcribe a conversation in English, Spanish, and Mandarin simultaneously, fostering smoother global communication.

5. Privacy and Responsible AI

Ethical development will play a crucial role in the future of ASR. Privacy-preserving technologies like federated learning and fairness guidelines will ensure secure, unbiased, and transparent ASR systems.

Example: Systems may operate fully on-device, ensuring that no audio data leaves the user's device, thus addressing privacy concerns in sensitive industries like healthcare and legal services.

6. Robust Models for Limited Resources

Addressing the digital divide, researchers will focus on improving ASR systems for low-resource settings, including less common languages, regional accents, and dialects. Robust models will also handle challenging environments like background noise or overlapping speakers.

Example: ASR systems could provide reliable transcription for underrepresented languages like Quechua or Wolof, as well as handle strong accents in languages like English and French.

7. Integration with Broader AI Systems

ASR will integrate more deeply with Natural Language Processing (NLP) and Conversational AI systems to enable context-aware dialogue and actions. This will enhance the capabilities of virtual assistants, customer support systems, and real-time analytics tools.

Example: Virtual assistants like Alexa or Google Assistant could recognize not just commands but also the emotional tone or urgency in the user’s voice, providing a more personalized interaction.

What Type of ASR Solution Is Right For You?

When it comes to Automatic Speech Recognition (ASR), there are different types available, each designed to cater to specific needs and use cases. Choosing the right type of ASR depends on factors like application, environment, and the desired outcome. Here are some of the most common types of ASR systems you can use:

- Real-Time ASR: This type of ASR processes audio input in real-time, making it ideal for live applications. It is used in scenarios like virtual assistants, live transcription, customer service, and video conferencing. Real-time ASR must be quick and efficient, offering minimal latency.

- Pre-Recorded ASR: This type processes pre-recorded audio or video files to generate transcripts. It's highly effective for applications where immediate results are not necessary, such as in media transcription, customer call analysis, or medical documentation. Pre-recorded ASR typically offers more accuracy since it allows for deeper processing.

- Cloud-Based ASR: Cloud-based ASR systems operate on a cloud server, providing the benefits of high scalability and powerful processing. They are particularly useful for large-scale applications where numerous users need access, like customer support centers or digital media platforms.

- On-Premise ASR: These systems are hosted locally, offering more control over data and security. On-premise ASR is preferred by industries like healthcare and finance, where privacy and compliance are critical concerns.

- End-to-End ASR Systems: End-to-end ASR systems integrate all stages of the speech recognition pipeline into a single model. They use deep learning models, such as Transformers, to directly convert raw audio into text without the need for distinct acoustic, language, or pronunciation models. These systems are known for their scalability and simplicity.

- Customized ASR: Some ASR solutions are tailored for specific industries or needs, incorporating specialized vocabulary or training to handle domain-specific jargon. These customized solutions are common in legal, medical, or technical fields where standard ASR might not provide sufficient accuracy.

How to Choose the Best ASR?

Exploring the best speech-to-text API for your needs can be challenging due to the wide range of options available, including cloud-based solutions, specialized APIs, and open-source speech-to-text platforms. Here are some key factors to consider that will help you determine the right ASR solution for your use case:

Purpose and Use Case: Determine what you need the ASR system for. Is it for live events or pre-recorded content? Real-time ASR is best for scenarios that require instantaneous responses, such as virtual assistants or customer support, while pre-recorded ASR works well for media transcription and analysis.

Accuracy Requirements: Different ASR systems have varying levels of accuracy depending on their training data, language models, and use of advanced technologies like deep learning. For highly specialized industries (like healthcare or finance), opting for a customized ASR with domain-specific vocabulary might be necessary to achieve the required accuracy. Additionally, consider the Word Error Rate (WER), which is a key metric used to evaluate the accuracy of ASR systems. WER measures the percentage of words that are incorrectly recognized by the system, and a lower WER indicates better performance.

Deployment Type: Consider whether a cloud-based or on-premise solution works best for you. Cloud-based ASR is advantageous if you need scalability and remote accessibility, while on-premise solutions provide greater data privacy and security, which is crucial for compliance-heavy sectors.

Scalability: If your use case involves handling a large volume of requests or scaling to accommodate future growth, ensure the ASR system can handle that. Cloud-based and end-to-end ASR models are typically well-suited for scaling.

Supported Languages and Accents: Make sure the ASR system supports the languages and accents you need. Multilingual support is important for businesses that serve a global audience, while accent recognition is key to improving accuracy for diverse user bases. Some advanced ASR systems also offer Speaker Diarization, which is the ability to distinguish between multiple speakers in a conversation, making it particularly useful for meetings, interviews, or call center recordings.

Integration Capabilities: Evaluate how easily the ASR system integrates with your existing tech stack. If you need to incorporate speech recognition into your applications, services, or tools, choose a solution that provides seamless integration via APIs and has flexible customization options.

Cost: ASR systems can vary significantly in terms of cost. Some cloud-based ASR providers offer pay-as-you-go pricing, which is suitable for occasional use, while customized or on-premise ASR solutions might involve higher initial setup costs. Ensure the pricing model aligns with your budget and expected usage.

Data Privacy and Compliance: If your organization handles sensitive data, make sure the ASR solution complies with industry standards for privacy and data protection, such as GDPR or HIPAA. On-premise solutions are often preferred for greater control over data handling.

Advanced Features: Consider any additional features that might benefit your use case. For example, Sentiment Analysis is a valuable feature that can analyze the emotional tone of spoken words, providing insights into customer satisfaction or speaker mood. This can be particularly helpful in customer service, sales, and other applications where understanding sentiment is crucial.

By considering these factors, you can choose the ASR system that best fits your needs, providing efficient, accurate, and reliable speech recognition that enhances your workflows and meets your specific goals.

Conclusion

Automatic Speech Recognition (ASR) is revolutionizing how we communicate and operate, driving efficiency, accuracy, and innovation across industries. Whether it’s real-time transcription, personalized virtual assistants, or specialized applications in healthcare and finance, ASR is shaping the future of human-machine interaction. Choosing the right ASR solution means embracing technology that’s reliable, scalable, and tailored to your needs.

At Vatis Tech, we’re at the forefront of ASR innovation, offering advanced solutions tailored to your needs. Our state-of-the-art technologies deliver accurate, secure, and scalable speech recognition that adapts to your unique challenges.

Ready to see the difference for yourself? Head to our No-Code Playground and try it for free today!

👉https://vatis.tech/app/register

Let’s explore the future of real-time transcription together!. Don’t just keep up with the future—lead it.

.avif)