Introduction

In 2025, audio data (customer calls, interviews, podcasts, webinars, meetings, and more) is a goldmine waiting to be tapped. Speech-to-text sentiment analysis APIs transform this raw input into actionable insights, revealing not just *what* people say but *how they feel*.

This guide dives deep into the best APIs for analyzing sentiment in transcribed audio, offering technical comparisons, niche applications, and expert tips to help you choose the perfect solution.

What Is Sentiment Analysis? (And Why Is It a Game-Changer For Audio/Video?)

Sentiment analysis, also known as opinion mining, is a powerful Natural Language Processing (NLP) technique. It automatically determines the emotional tone (positive, negative, or neutral) expressed in a piece of text. When applied to transcribed speech from audio and video, it becomes an incredibly versatile tool, allowing you to understand the voice of the customer, the mood of your audience, and the effectiveness of your communication at scale.

Examples of Sentiment in Transcribed Speech

Consider these transcribed phrases (imagine them from a customer service call, a meeting, or a podcast):

"I'm absolutely thrilled with your service!" (Clearly positive)

"This is completely unacceptable! I'm furious!" (Clearly negative)

"It's okay, I guess... not the best, not the worst." (Neutral/Weakly positive)

"The agent told me to reboot the router, but I'm still having the same problem." (Negative: identifies a problem and a failed solution)

"I appreciate the quick response, but the issue needs escalating." (Mixed: positive on the response, negative on the unresolved issue)

A good sentiment analysis system, optimized for speech-to-text data, can distinguish these nuances, provide a sentiment score (or probability distribution), and even identify specific emotions (joy, anger, frustration, sadness) and the aspects of the conversation driving the sentiment (e.g., "wait time," "agent helpfulness," "product features," "audio quality").

Why Sentiment Analysis for Speech-to-Text Matters

Key Benefits Across Industries

Applying sentiment analysis to audio opens up a source of feedback that text-based reviews miss. It captures emotion and intent from spoken words and, with models that also analyze the audio signal itself, tone of voice. Key benefits include:

- Customer Insights at Scale: Analyze thousands of call center interactions to spot trends.

- Brand Sentiment Tracking: Monitor podcasts, webinars, and other audio/video media for real-time reputation insights.

- Content Optimization: Fine-tune podcasts or videos based on listener reactions.

- Research Precision: Extract nuanced opinions from focus groups or interviews.

- Operational Efficiency: Summarize meetings and flag emotional hotspots for follow-up.

Challenges Unique to Spoken Language

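Unlike text-only sentiment tools, speech-to-text sentiment APIs must first produce a usable transcript from messy real-world audio, with background noise, accents, and overlapping voices. Errors at that stage carry through to the sentiment results, which makes choosing the right API critical.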

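How Does Sentiment Analysis Work With Speech-To-Text?

Once the audio has been transcribed, the text is analyzed with one of several families of techniques:

- Rule-Based Systems: These use predefined word lists (lexicons) in which each word carries a sentiment score, combined with grammatical rules such as negation handling. For example, words like "great" or "excellent" indicate positivity, while "bad" or "terrible" signal negativity.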

- Machine Learning Systems: Algorithms such as Naive Bayes, Support Vector Machines, and neural networks are trained on large datasets of labeled text, learning patterns that predict sentiment. For example, a model trained on labeled customer reviews can then classify new feedback.

- Hybrid Systems: Combine the lexicon approach with machine learning methods for improved accuracy.

- Deep Learning Systems: Use advanced models such as transformers (e.g., BERT-style encoders or GPT models) that read each word in the context of the whole passage, enabling better detection of nuanced emotions and sarcasm.
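To make the rule-based approach concrete, here is a minimal Python sketch. The tiny lexicon, its scores, and the one-word negation rule are illustrative assumptions rather than any product's actual rules; established lexicon tools such as VADER use far larger word lists and many more rules.

```python
import re

# Hypothetical lexicon: word -> polarity score (illustrative values only).
LEXICON = {
    "great": 2.0, "excellent": 3.0, "thrilled": 3.0, "fantastic": 3.0,
    "bad": -2.0, "terrible": -3.0, "unacceptable": -3.0, "furious": -3.0,
}
NEGATORS = {"not", "never", "no", "isn't", "wasn't", "don't"}

def rule_based_sentiment(text: str) -> tuple[str, float]:
    """Sum lexicon scores, flipping a word's polarity when a negator precedes it."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0.0)
        # Simple grammatical rule: a negator immediately before flips polarity.
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return label, score

print(rule_based_sentiment("The service was not great"))
```

The negation rule shows why rule-based systems are transparent but brittle: sarcasm, or a negator two words away ("not very great"), slips past the one-word window. Learned models handle such cases better.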

The Practical Process

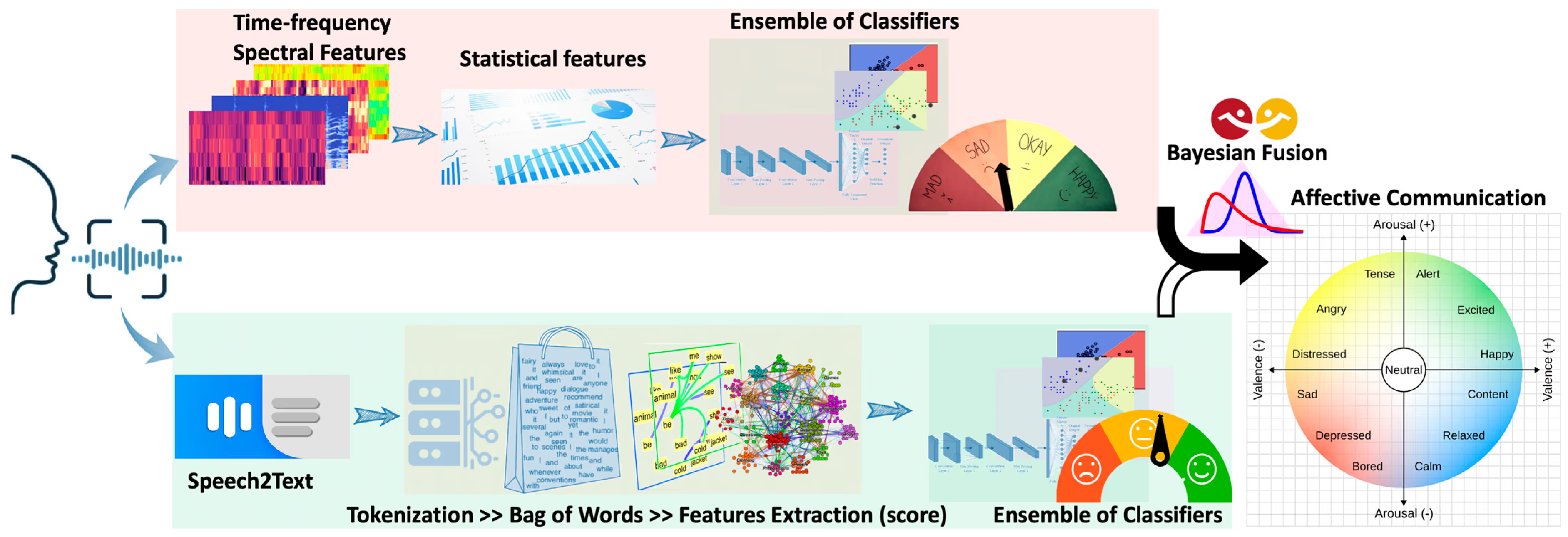

The sentiment analysis workflow typically includes the following steps:

- Text Preprocessing: Cleaning up the transcribed text by removing irrelevant characters, handling punctuation, and standardizing text (like converting it to lowercase).

- Feature Extraction: Transforming the text into numerical data the model can interpret. For instance, turning words into embeddings that capture meaning.

- Sentiment Classification: Applying a trained model or algorithm to the numerical features to determine the sentiment. The model recognizes patterns associated with each sentiment that it learned during training.

- Output: Providing clear results such as sentiment labels (positive, neutral, negative), scores, or probability distributions.

Source: Multimodal Affective Communication Analysis: Fusing Speech Emotion and Text Sentiment Using Machine Learning
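The preprocessing and feature-extraction steps can be sketched in a few lines of Python. The filler list and the decision to strip all punctuation are assumptions for illustration, and the word counts shown here are the simplest possible features; production systems typically use learned embeddings instead.

```python
import re
from collections import Counter

# Hypothetical filler list; real transcripts may need a longer, language-specific one.
FILLERS = {"uh", "um", "erm", "hmm"}

def preprocess(transcript: str) -> str:
    """Lowercase, replace punctuation with spaces, and drop spoken fillers."""
    text = transcript.lower()
    text = re.sub(r"[^\w\s']", " ", text)  # keep letters, digits, apostrophes
    tokens = [t for t in text.split() if t not in FILLERS]
    return " ".join(tokens)

def extract_features(clean_text: str) -> Counter:
    """Bag-of-words features: how often each word occurs."""
    return Counter(clean_text.split())

clean = preprocess("The new software update is fantastic; it improved my workflow immensely!")
print(clean)
print(extract_features(clean)["fantastic"])
```

Applied to the input sentence in the example below, `preprocess` yields the same lowercase, punctuation-free text shown there.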

Example Output with Score Interpretation

- Input Audio: "The new software update is fantastic; it improved my workflow immensely!"

- Transcribed Text (after preprocessing): "the new software update is fantastic it improved my workflow immensely"

- Sentiment Classification: Positive (Score: 0.93)

In this example, the classification model identified words like "fantastic" and "improved," which are strongly associated with positive sentiments. The overall context of improvement and satisfaction led the model to confidently classify the sentiment as positive.

The sentiment score is derived from the probability distribution the model produces. The model encodes each word in the context of the full sentence and assigns a probability to every possible label (for example, positive, neutral, and negative), with the probabilities summing to 1. The score of 0.93 is the probability assigned to "positive", which can be read as the model's confidence that the text expresses positive sentiment.
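Many neural classifiers produce this distribution by applying a softmax to their raw output scores (logits). The sketch below assumes a three-label model; the logits are made up to land near the 0.93 in the example and are not the output of any specific API.

```python
import math

LABELS = ["positive", "neutral", "negative"]

def softmax(logits: list[float]) -> list[float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(logits: list[float]) -> tuple[str, float]:
    """Return the most likely label and its probability (the 'score')."""
    probs = softmax(logits)
    best = probs.index(max(probs))
    return LABELS[best], probs[best]

# Hypothetical logits a classifier might emit for the example sentence.
label, score = classify([3.2, 0.4, -0.9])
print(label, round(score, 2))  # prints: positive 0.93
```

The score is therefore a relative confidence among the labels the model knows, not an absolute measure of how positive the speaker felt.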

Crucial Considerations for Speech-to-Text Sentiment Analysis

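- Transcription Accuracy (WER): Word Error Rate benchmarks matter; aim for under 10% on clean audio and under 20% on noisy recordings. Transcription errors flow directly into sentiment scoring, and a single misrecognized word (such as a dropped "not") can reverse the result.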

- Conversational Nuance: Handling fillers ("uh," "um"), interruptions, and slang is non-negotiable.

- Speaker Diarization: Multi-speaker audio requires precise separation (e.g., 95%+ accuracy on distinct voices).

- Prosodic Analysis: Emerging APIs leverage pitch, tempo, and volume for richer sentiment detection; think frustration in a raised voice.
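WER is the word-level edit distance between a reference transcript and the API's output (substitutions + deletions + insertions), divided by the number of words in the reference. The Python sketch below is a plain dynamic-programming implementation, assuming a non-empty reference that has already been normalized (lowercased, punctuation removed). It also shows why a low WER is not the whole story for sentiment.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    # dp[i][j] = edits needed to turn the first i reference words
    # into the first j hypothesis words.
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # substitution or match
            )
    return dp[len(ref)][len(hyp)] / len(ref)

# One dropped word: WER is only 20%, yet the sentiment flips.
print(word_error_rate("the agent was not helpful", "the agent was helpful"))
```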

Advanced Features to Demand in 2025

Basic positive/negative scoring is table stakes. Look for these cutting-edge capabilities:

- Aspect-Based Sentiment Analysis (ABSA): Ties sentiment to specific topics (e.g., “The app crashes often” = negative on reliability).

- Granular Emotion Detection: Beyond anger or joy, detect subtleties like sarcasm or hesitation.

- Intent Classification: Identifies goals (e.g., “I need a refund” = refund request, often signaling a complaint).

- Contextual Topic Modeling: Clusters discussions into themes (e.g., pricing, support).

- Audio Summarization: Distills 30-minute calls into 3-sentence takeaways.

- Privacy Compliance: Redacts PII (names, credit card numbers) to support compliance with regulations such as GDPR.

- Multilingual Processing: Supports 50+ languages with dialect-specific models.
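As a rough illustration of aspect-based sentiment, the Python sketch below scores each sentence with a small lexicon and credits that score to every aspect the sentence mentions. The aspect names, keyword sets, and polarity values are hypothetical; production ABSA typically relies on trained models rather than keyword lists.

```python
import re

# Hypothetical aspect keywords and a tiny polarity lexicon, for illustration only.
ASPECTS = {
    "reliability": {"crash", "crashes", "bug", "bugs", "freezes"},
    "support": {"agent", "support", "response"},
    "pricing": {"price", "cost", "expensive", "cheap"},
}
POLARITY = {
    "crash": -1, "crashes": -1, "freezes": -1, "expensive": -1, "slow": -1,
    "quick": 1, "helpful": 1, "cheap": 1, "appreciate": 1,
}

def aspect_sentiment(transcript: str) -> dict[str, int]:
    """Score each sentence, then add that score to every aspect it mentions."""
    results: dict[str, int] = {}
    for sentence in re.split(r"[.!?]+", transcript.lower()):
        words = set(re.findall(r"[a-z']+", sentence))
        score = sum(POLARITY.get(w, 0) for w in words)
        for aspect, keywords in ASPECTS.items():
            if words & keywords:
                results[aspect] = results.get(aspect, 0) + score
    return results

print(aspect_sentiment("The app crashes often. The agent was quick and helpful!"))
```

Working at sentence level keeps the sketch simple but coarse: in a mixed sentence such as "I appreciate the quick response, but the issue needs escalating," every mentioned aspect receives the same score. Trained ABSA models instead learn which opinion words attach to which aspect.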

Top Speech-to-Text Sentiment Analysis APIs in 2025

Here’s a detailed comparison based on performance, features, and fit:
